- Adaptive stray-light compensation in dynamic multi-projection mapping

- Adaptive Temporal Sampling for Volumetric Path Tracing of Medical Data

- Analytic Displacement Mapping using Hardware Tessellation

- Anisotropic Surface Based Deformation

- Auto-Calibration for Dynamic Multi-Projection Mapping on Arbitrary Surfaces

- Automated Heart Localization in Cardiac Cine MR Data

- Demo of Face2Face: Real-time Face Capture and Reenactment of RGB Videos

- Enhanced Sphere Tracing

- Evaluating the Usability of Recent Consumer-Grade 3D Input Devices

- Face2Face: Real-time Face Capture and Reenactment of RGB Videos

- FaceForge: Markerless Non-Rigid Face Multi-Projection Mapping

- FaceInCar: Real-time Dense Monocular Face Tracking of a Driver

- FaceVR: Real-Time Facial Reenactment and Eye Gaze Control in Virtual Reality

- GroPBS: Fast Solver for Implicit Electrostatics of Biomolecules

- Fundamental Considerations on Publishing the FAU Coin Collection as a Freely Accessible Database on the WWW

- HeadOn: Real-time Reenactment of Human Portrait Videos

- Hierarchical Multi-Layer Screen-Space Ray Tracing

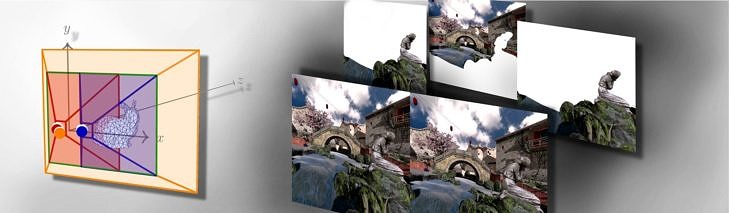

- Hybrid Mono-Stereo Rendering in Virtual Reality

- Interactive Model-based Reconstruction of the Human Head using an RGB-D Sensor

- Interactive Painting and Lighting in Dynamic Multi-Projection Mapping

- Learning Real-Time Ambient Occlusion from Distance Representations

- Low-Cost Real-Time 3D Reconstruction of Large-Scale Excavation Sites using an RGB-D Camera

- Multi-Layer Depth of Field Rendering with Tiled Splatting

- Multi-Resolution Attributes for Hardware Tessellated Objects

- Real-time 3D Reconstruction at Scale using Voxel Hashing

- Real-time Collision Detection for Dynamic Hardware Tessellated Objects

- Real-time Expression Transfer for Facial Reenactment

- Real-time Local Displacement using Dynamic GPU Memory Management

- Real-Time Pixel Luminance Optimization for Dynamic Multi-Projection Mapping

- Reality Forge: Interactive Dynamic Multi-Projection Mapping

- Robust Blending and Occlusion Compensation in Dynamic Multi-Projection Mapping

- Shape Adaptive Cut Lines

- Spherical Fibonacci Mapping

- State of the Art Report on Real-time Rendering with Hardware Tessellation

- Stray-Light Compensation in Dynamic Projection Mapping

- Visualization and Deformation Techniques for Entertainment and Training in Cultural Heritage

- VolumeDeform: Real-time Volumetric Non-rigid Reconstruction